Springbound Devlog 4: Making critters talk

A deep dive into sound designing a language and dynamic vocals for critters using FMOD

Hey! I'm Bryce, a solo game developer and creator of Springbound. This is part of a series of deep dives exploring interesting aspects of the game and sharing lessons learned along the way.

Here’s the whole series if you’d like to read more: Springbound Devlogs

This deep dive is going to be a little different than the others. Instead of tech art, we’re going to explore sound design!

We’ll explore the process of giving the characters in Springbound a voice by designing the critters’ vocal patterns, creating a synthesizer from my own voice, and implementing real-time spoken dialogue in the game using FMOD, a tool for authoring and implementing game audio.

Animalese and other dialects

The Animal Crossing series pioneered cute animal gibberish in games. “Animalese” is the language spoken by all its characters. It’s charming and adds genuine depth to interactions with the characters; it feels like they’re speaking directly to you! Even though the words are mostly unintelligible, you understand their tone and meaning, which makes conversations feel more real. Here’s what Animalese sounds like in Animal Crossing: New Horizons:

It’s actually not gibberish at all! The system rapidly strings together recordings of individual letter sounds to create speech. Characters speak each letter of each word aloud, forming that distinctive Animalese dialect. If you slow down the audio and listen carefully, you’ll notice that many English letters are mispronounced, but this adds to the charm! (It’s more accurate with Japanese phonetics, since Japanese was the game’s original language.)
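Here’s a minimal sketch of the letter-by-letter idea. It assumes one short recorded clip per letter and a fixed duration per character. This is not Nintendo’s implementation, and `schedule_letters` and `ClipEvent` are hypothetical names used only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClipEvent:
    letter: str   # which per-letter recording to trigger
    start: float  # start time in seconds from the beginning of the line


def schedule_letters(line: str, letter_duration: float = 0.06) -> list[ClipEvent]:
    """Hypothetical Animalese-style scheduler: one short clip per letter.

    Every character advances the clock by `letter_duration`, so letters play
    back to back while spaces and punctuation become short silent gaps.
    """
    events: list[ClipEvent] = []
    t = 0.0
    for ch in line.lower():
        if ch.isalpha():
            events.append(ClipEvent(letter=ch, start=t))
        t += letter_duration
    return events


# Usage: print the trigger timeline for a short line.
for event in schedule_letters("Hi there"):
    print(f"{event.start:.2f}s  play clip '{event.letter}'")
```

Real systems also vary pitch and speed per letter. This sketch leaves that out so the timing logic stays visible.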

Other games use variations of this, like Stray (with a more complex robot language) or A Short Hike (simpler, using beeps that play as words appear).

These dialects sound realistic and work well in games with animals running economic systems or robots communicating, but they feel too structured for my lighthearted game about forest critters. I like the general approach to gibberish and sound, but I want something gentler and closer to animal vocalizations.

I scraped together a simple voice system that played one-shot animal calls for each line. This was a lot of fun (did you know that wild turkeys have a huge variety of calls?), but it ultimately sounded random and inconsistent. We needed something consistent enough to sound charming without being distracting.

Turning my voice into a synthesizer

Hey, why not use my own voice as a starting point for these critters? If you know me you know I was already making these sounds while playing through the game anyways 🫣

Here’s a short clip from my critter vocal audition (recorded while alone at home, trimmed to the least embarrassing part):

Cringe 😅 But this gives us a decent starting point. We aren’t recording direct dialogue for each line. Instead, we need to transform this one recording into a sound that can dynamically stretch to match any dialogue line in the game, since lines vary in length. How do we do this?

One trick is isolating a “pure” sound from a recording—that is, a consistent sound at a consistent pitch that correctly captures the timbre of the sound without unnecessary noise or details. This will be a good starting point for further manipulation.

The easiest way to do this is by chopping up the recording to find a single cycle—a single repetition in the waveform that makes up this sound we’re trying to capture. If we isolate a cycle and repeat it endlessly, we’ll hear a synthesized sound that captures the character of the sound. Let’s zoom in on the whole waveform to find one of these:

This unique waveform combines many characteristics—the timbre of my voice, the pitch and intensity of the sound, and the phonetic sound “mmm”—to create a sound with a distinct character that will soon become a critter’s voice!
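Zooming in by eye works fine for a single recording, but the same search can be automated. The sketch below uses hypothetical helpers that are not part of FMOD or Springbound:

- `estimate_period` estimates the cycle length with normalized autocorrelation.
- `extract_cycles` cuts whole cycles starting at a rising zero crossing.

It runs on a synthetic waveform rather than a real recording.

```python
import math


def estimate_period(samples: list[float], min_lag: int, max_lag: int) -> int:
    """Estimate one cycle's length (in samples) via normalized autocorrelation.

    Pick min_lag/max_lag from the expected pitch range and keep
    max_lag < 2 * min_lag, so a multiple of the true period (which
    correlates just as well) can't also land in the search range.
    """
    best_lag, best_score = min_lag, -math.inf
    for lag in range(min_lag, max_lag + 1):
        n = len(samples) - lag
        head, tail = samples[:n], samples[lag:lag + n]
        dot = sum(x * y for x, y in zip(head, tail))
        energy = math.sqrt(sum(x * x for x in head) * sum(y * y for y in tail))
        score = dot / energy if energy > 0 else 0.0
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def rising_zero_crossing(samples: list[float], begin: int = 1) -> int:
    """Index of the first sample where the waveform crosses zero going up."""
    for i in range(max(begin, 1), len(samples)):
        if samples[i - 1] < 0.0 <= samples[i]:
            return i
    raise ValueError("no rising zero crossing found")


def extract_cycles(samples: list[float], period: int, count: int = 1) -> list[float]:
    """Cut `count` whole cycles starting at a rising zero crossing.

    Starting at a zero crossing and cutting whole cycles keeps the
    loop point close to zero, which helps avoid clicks.
    """
    start = rising_zero_crossing(samples)
    end = start + period * count
    if end > len(samples):
        raise ValueError("recording too short for that many cycles")
    return samples[start:end]


# Usage with a synthetic "voice": a 100-sample cycle (480 Hz at 48 kHz) plus a harmonic.
voice = [math.sin(2 * math.pi * i / 100) + 0.5 * math.sin(2 * math.pi * 3 * i / 100)
         for i in range(2000)]
period = estimate_period(voice, min_lag=70, max_lag=130)
one_cycle = extract_cycles(voice, period)
print(period, len(one_cycle))
```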

If we take that single cycle and loop it, we hear this:

Neat! The sound is pure but has a robotic, synth-like quality that loses some of the warmth and breathiness of the original. This happens because much of the sound’s character extends beyond a single cycle, and we’ve lost that here. A good compromise is to use a longer (but still loopable) sample. This is what it sounds like if we take ~40 cycles and loop them:

The sound retains more of its vocal qualities compared to the single-sample version. This feels good enough to bring into the game!
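In FMOD, the looping clip handles this stretching for us. In raw sample terms, though, stretching is just repetition until the line’s duration is filled. The minimal sketch below makes two assumptions: 48 kHz audio, and a hypothetical 150 Hz fundamental for the duration math. The helper names are illustrative.

```python
from __future__ import annotations


def samples_for_duration(seconds: float, sample_rate: int = 48000) -> int:
    """How many samples a dialogue line of `seconds` needs."""
    return round(seconds * sample_rate)


def cycles_duration(cycle_count: int, fundamental_hz: float) -> float:
    """Length in seconds of `cycle_count` cycles at a given pitch."""
    return cycle_count / fundamental_hz


def loop_to_length(snippet: list[float], total_samples: int) -> list[float]:
    """Repeat a loopable snippet end to end until it fills total_samples."""
    if not snippet:
        raise ValueError("snippet must not be empty")
    repeats, remainder = divmod(total_samples, len(snippet))
    return snippet * repeats + snippet[:remainder]


# ~40 cycles at a hypothetical 150 Hz pitch is roughly a quarter second of audio.
print(f"{cycles_duration(40, 150.0):.3f} s per loop")

# Stretch a stand-in snippet to fill a 2.5-second line.
loop = [0.0, 0.5, 1.0, 0.5]
line_audio = loop_to_length(loop, samples_for_duration(2.5))
print(len(line_audio))  # 120000
```

A longer loop repeats less often within a line, which is one reason the ~40-cycle version sounds less mechanical than the single cycle.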

Dropping our first voice into the game

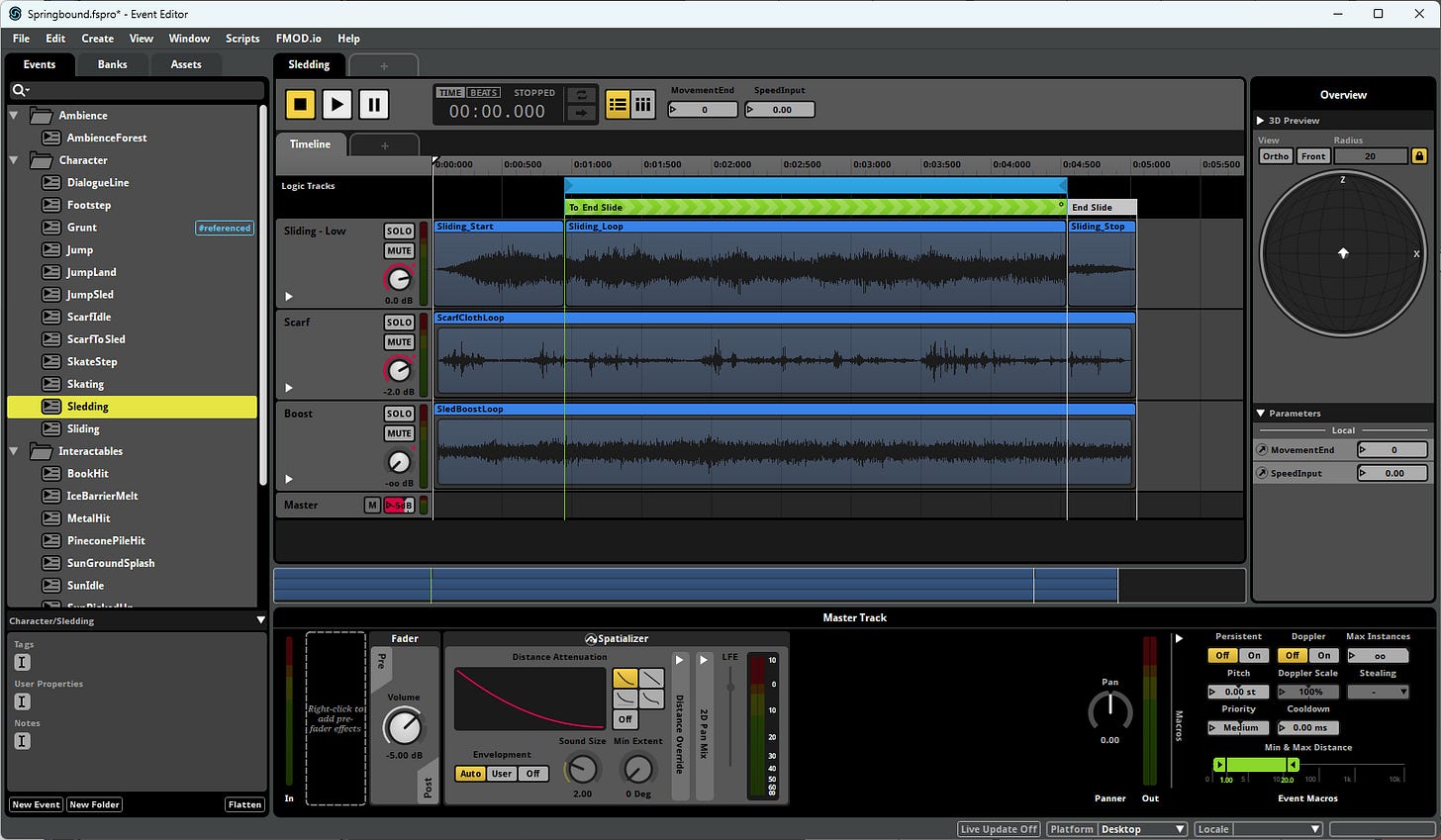

Springbound uses the audio middleware FMOD. It’s excellent for creating procedural, interactive audio and lets you manipulate sounds in response to gameplay with many effects and ways to combine and layer sounds. I’ve used it in prior projects and it’s free for indies if your revenue is < $200k per year!

FMOD might look familiar if you’ve used a DAW like Ableton Live or Logic. Here’s what the FMOD editor looks like for the sledding audio effect in Springbound:

The dialogue line audio event is much simpler: a single looping clip of the sample we isolated above. I’ll add it to the game so it plays whenever someone starts talking, and change its pitch depending on which character is talking, across a range of 7 semitones (a semitone is a half step in musical terms, so the range spans a perfect fifth).
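Below is a sketch of one way to give each character a stable pitch inside that 7-semitone window. The hashing scheme and the critter IDs are made-up assumptions for illustration; hand-assigning an offset per character works just as well. The semitone-to-ratio formula is standard equal temperament.

```python
import hashlib

PITCH_RANGE_SEMITONES = 7.0  # total spread described in the post


def semitones_to_ratio(semitones: float) -> float:
    """Equal temperament: every +12 semitones doubles the playback rate."""
    return 2.0 ** (semitones / 12.0)


def character_pitch_offset(character_id: str) -> float:
    """Hypothetical: hash a character's ID to a stable offset in [-3.5, +3.5] semitones.

    hashlib is used instead of Python's built-in hash(), which is randomized
    per process for strings, so the same critter always gets the same voice.
    """
    digest = hashlib.sha256(character_id.encode("utf-8")).digest()
    unit = digest[0] / 255.0  # first byte mapped to 0.0..1.0
    return (unit - 0.5) * PITCH_RANGE_SEMITONES


# Usage with made-up critter IDs.
for name in ("critter-a", "critter-b", "critter-c"):
    offset = character_pitch_offset(name)
    print(f"{name}: {offset:+.2f} semitones -> x{semitones_to_ratio(offset):.3f}")
```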

Now, here’s what it sounds like in the game:

🙉 Ehh…not great. It has the basic character we want, but it lacks any variation of spoken words and breaks between sentences. The sound doesn’t change at all even as the characters’ mouths open and close. We can do much better!

Making critters speak with vocal inflections

Let’s tackle the low-hanging fruit first. I mentioned above how this lacks the variation and intonations of spoken words. We’ll change the pitch of the voice every time a character starts speaking a new word, adding natural inflection and variation:

Much better! This uses a pitch slide that transitions to new pitches over a tenth of a second to make those changes sound more natural.
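A per-word inflection with a tenth-of-a-second slide could look like the sketch below. The following are all assumptions for illustration, not the game’s actual settings:

- the ±2 semitone random spread,
- the linear glide,
- the 25 ms update step,
- the `PitchGlide` class itself.

The sketch only shows the math.

```python
from __future__ import annotations

import random


def word_pitch_targets(line: str, spread: float = 2.0, seed: int | None = None) -> list[float]:
    """Pick a random pitch offset (in semitones) for each word in the line."""
    rng = random.Random(seed)
    return [rng.uniform(-spread, spread) for _ in line.split()]


class PitchGlide:
    """Slide linearly from the current pitch to a new target over `glide_time` seconds."""

    def __init__(self, glide_time: float = 0.1, initial: float = 0.0) -> None:
        self.glide_time = glide_time
        self.start_value = initial
        self.target = initial
        self.elapsed = glide_time  # begin "settled" at the initial pitch

    def value(self) -> float:
        if self.glide_time <= 0.0 or self.elapsed >= self.glide_time:
            return self.target
        progress = self.elapsed / self.glide_time
        return self.start_value + (self.target - self.start_value) * progress

    def set_target(self, target: float) -> None:
        # Start the new slide from wherever the previous one currently is.
        self.start_value = self.value()
        self.target = target
        self.elapsed = 0.0

    def update(self, dt: float) -> float:
        self.elapsed += dt
        return self.value()


# Usage: each new word sets a target; the game loop advances the glide every frame.
glide = PitchGlide(glide_time=0.1)
for target in word_pitch_targets("well hello there", seed=7):
    glide.set_target(target)
    frames = [round(glide.update(0.025), 3) for _ in range(5)]
    print(f"word target {target:+.2f} st: {frames}")
```

Starting each slide from the glide’s current value, rather than from the previous target, keeps pitch continuous even when words arrive faster than the glide time.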

But we can still do better! One big missing piece is variation in timbre. When listening to spoken language, we’re used to the phonetic coloring of vowels and consonants, like “eee”, “aah”, and “mmm”, and our critter voices have none of that.

Lucky for us, the field of signal processing has an earload of research into how to create this coloring by simulating the way vocal tracts resonate. Which brings us to a new technique we can use: ✨Formant filtering✨!

Making critters speak in vowels

You might have heard a wah pedal, which makes a guitar sound like it’s saying “wah-wah”, or its cousin the talk box, which gives the intro of Livin’ On A Prayer its talking-guitar sound. A formant filter is similar but more capable!

Formants are peaks in your voice’s frequency spectrum where the shape of your mouth and throat amplifies the harmonics. These amplified frequencies are what make your unique voice sound like you, and are also responsible for creating vowel sounds.

Formant shifting is when you manually boost certain frequencies in a sound (not necessarily a human voice) to give its timbre vowel-like coloring. For example, boosting the first two formants at the right frequencies might create an “ooh” or “ehh” sound. It’s fascinating to see what our ears pick up on! Here’s a great explainer if you’d like to learn more.
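To make “what our ears pick up on” concrete, the sketch below tells vowels apart using only the first two formant frequencies (F1 and F2). The table holds ballpark textbook averages for adult male voices. Real voices vary a lot, and these are not Springbound’s values.

```python
import math

# Ballpark F1/F2 averages (Hz) often quoted for adult male voices.
# Illustrative only: real voices vary widely by speaker and context.
VOWEL_FORMANTS = {
    "ee": (270.0, 2290.0),
    "eh": (530.0, 1840.0),
    "aah": (730.0, 1090.0),
    "ooo": (300.0, 870.0),
}


def nearest_vowel(f1: float, f2: float) -> str:
    """Pick the vowel whose (F1, F2) pair is closest on a log-frequency scale."""
    def distance(vowel: str) -> float:
        ref_f1, ref_f2 = VOWEL_FORMANTS[vowel]
        return math.hypot(math.log2(f1 / ref_f1), math.log2(f2 / ref_f2))

    return min(VOWEL_FORMANTS, key=distance)


print(nearest_vowel(700.0, 1150.0))  # aah
print(nearest_vowel(320.0, 900.0))   # ooo
```

Distances are measured in octaves (log2) because pitch perception is roughly logarithmic, so a 100 Hz error matters more at F1 than at F2.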

Let’s define a simple “dialect” for the critters:

Critters will be able to say two vowels: “ooo” and “aah”

Let’s call this parameter “Syllable” and drive it from the individual letters in a dialogue line.

Their mouths open and close between words to delineate them

Let’s call this parameter “Mouth Open” and say it’s open when words are being spoken in the dialogue line and closed when not.
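This dialect can be turned into per-character parameter values, as sketched below. The post doesn’t say exactly how letters map to “Syllable”. The rule used here, where round-sounding letters lean toward “ooo”, is a hypothetical stand-in, as are the names `VoiceFrame` and `dialect_frames`.

```python
from __future__ import annotations

from dataclasses import dataclass

ROUND_LETTERS = set("ouwmbp")  # hypothetical: letters that lean toward "ooo"


@dataclass
class VoiceFrame:
    char: str
    syllable: float    # 0.0 = "ooo", 1.0 = "aah"
    mouth_open: float  # 1.0 while a word is spoken, 0.0 between words


def dialect_frames(line: str) -> list[VoiceFrame]:
    """Turn a dialogue line into one parameter frame per character."""
    frames: list[VoiceFrame] = []
    syllable = 0.0
    for ch in line:
        if ch.isalpha():
            syllable = 0.0 if ch.lower() in ROUND_LETTERS else 1.0
            frames.append(VoiceFrame(ch, syllable, 1.0))
        else:
            # Spaces and punctuation close the mouth; keep the last vowel so
            # "Syllable" doesn't jump while the voice is muffled.
            frames.append(VoiceFrame(ch, syllable, 0.0))
    return frames


# Usage: these values would be sent to the "Syllable" and "Mouth Open" parameters.
for frame in dialect_frames("Oh, hi!"):
    print(repr(frame.char), frame.syllable, frame.mouth_open)
```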

Now that we’ve defined this dialect, we’re ready to formant shift 🏄! We already know the sound to be processed and can figure out where its formants are, so all we need to implement this in real time is a multiband EQ! I set up a few filters in FMOD’s built-in EQ:

A low-pass filter, automated by the “Mouth Open” parameter, that sweeps down to ~280 Hz when the mouth closes

A notch filter (attenuates frequencies in a specific range) over the first formant, automated by the “Syllable” parameter

A peaking filter (boosts frequencies in a specific range) over the second formant, also automated by “Syllable”
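FMOD’s EQ handles the filtering internally. The sketch below spells out one standard way such filters are computed: the biquad formulas from Robert Bristow-Johnson’s “Audio EQ Cookbook”. FMOD may not compute its filters this way. The sketch also includes a hypothetical mapping from the two parameters to filter frequencies. Only the ~280 Hz closed-mouth cutoff comes from this post. The 20 kHz “open” cutoff, the Q value, and the formant sweep ranges are placeholder assumptions.

```python
from __future__ import annotations

import cmath
import math

SAMPLE_RATE = 48000.0


def log_lerp(a: float, b: float, t: float) -> float:
    """Interpolate between two frequencies on a log scale (t = 0 -> a, t = 1 -> b)."""
    return a * (b / a) ** t


def eq_settings(mouth_open: float, syllable: float) -> dict[str, float]:
    """Hypothetical mapping: mouth_open 0..1 (closed..open), syllable 0..1 ("ooo".."aah")."""
    return {
        "lowpass_hz": log_lerp(280.0, 20000.0, mouth_open),  # ~280 Hz when closed
        "notch_hz": log_lerp(300.0, 730.0, syllable),        # placeholder F1 sweep
        "peak_hz": log_lerp(870.0, 1090.0, syllable),        # placeholder F2 sweep
    }


def biquad(kind: str, f0: float, q: float = 0.707, gain_db: float = 0.0,
           fs: float = SAMPLE_RATE) -> tuple[list[float], list[float]]:
    """Normalized (b, a) coefficients from the Audio EQ Cookbook."""
    w0 = 2.0 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2.0 * q)
    if kind == "lowpass":
        b = [(1 - cos_w0) / 2, 1 - cos_w0, (1 - cos_w0) / 2]
        a = [1 + alpha, -2 * cos_w0, 1 - alpha]
    elif kind == "notch":
        b = [1.0, -2 * cos_w0, 1.0]
        a = [1 + alpha, -2 * cos_w0, 1 - alpha]
    elif kind == "peak":
        amp = 10.0 ** (gain_db / 40.0)
        b = [1 + alpha * amp, -2 * cos_w0, 1 - alpha * amp]
        a = [1 + alpha / amp, -2 * cos_w0, 1 - alpha / amp]
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    return [x / a[0] for x in b], [x / a[0] for x in a]


def magnitude_db(b: list[float], a: list[float], freq: float,
                 fs: float = SAMPLE_RATE) -> float:
    """Filter gain at `freq`, evaluating H(z) on the unit circle."""
    z_inv = cmath.exp(-2j * math.pi * freq / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20.0 * math.log10(max(abs(num / den), 1e-12))


# Usage: with the mouth closed, the voice is strongly muffled above a few hundred Hz.
settings = eq_settings(mouth_open=0.0, syllable=1.0)
lp_b, lp_a = biquad("lowpass", settings["lowpass_hz"])
print(f"{magnitude_db(lp_b, lp_a, 2000.0):.1f} dB at 2 kHz")
```

Frequencies are interpolated on a log scale so that a parameter sweep sounds even to the ear instead of rushing through the low end.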

After one final touch of adding a pitch LFO for some subtle vibrato while speaking, the dialogue sounds like this:

This sounds SO much better! 🥳 The vowel sounds change frequently within each word, adding lots of interesting variation. Between words, the muffled character creates more realistic divisions in speech. Delightful!
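The vibrato from the pitch LFO can be sketched as a sine wave in cents layered on top of the character and word offsets. The 5 Hz rate and ±20 cent depth are hypothetical values for a subtle effect, not the game’s actual settings.

```python
import math


def vibrato_ratio(t: float, rate_hz: float = 5.0, depth_cents: float = 20.0) -> float:
    """Pitch multiplier at time t from a sine LFO (100 cents = 1 semitone)."""
    cents = depth_cents * math.sin(2.0 * math.pi * rate_hz * t)
    return 2.0 ** (cents / 1200.0)


def total_pitch_ratio(character_semitones: float, word_semitones: float, t: float) -> float:
    """Combine the per-character offset, the per-word inflection, and vibrato."""
    return 2.0 ** ((character_semitones + word_semitones) / 12.0) * vibrato_ratio(t)


# Usage: sample one LFO cycle (200 ms at 5 Hz) in 25 ms steps.
for step in range(9):
    t = step * 0.025
    print(f"{t:.3f}s  x{total_pitch_ratio(1.0, -0.5, t):.4f}")
```

Multiplying ratios is the same as adding semitones or cents, so each layer can be tuned independently.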

After several iterations of experimentation, we’ve created a dialect and voices for our critters! We started from a simple voice recording and layered on procedural sound manipulation, with pitch variation and formant filtering driven by the dialogue. The result is voices that feel natural and match the playful spirit of Springbound. I had a lot of fun working on this part of the game, as you can probably tell!

Thanks for reading! In the next deep dive, learn about the animation process of bringing these critters to life: